Data is the fuel of the digital economy, but not all data is equally useful or accessible. If you want to gain insights from your data and make data-driven decisions, you need to store, organize and analyze your data in a way that suits your business needs and goals.

One way to do that is to use a data mart. A data mart is a subset of a data warehouse that focuses on a specific business area, department or topic. Data marts provide specific data to a defined group of users, allowing them to access critical insights quickly without having to search through an entire data warehouse.

In this post, we will explain what a data mart is, how it differs from a data warehouse and a data lake, and what are the benefits and challenges of using a data mart.

What is a data warehouse?

Before we dive into data marts, let's first understand what a data warehouse is. A data warehouse is a centralized repository that stores the historical and current data of an entire organization. Data warehouses typically contain large amounts of data, including historical data.

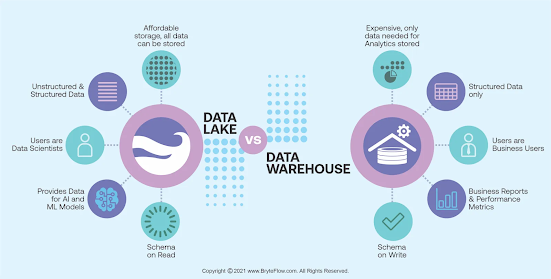

The data in a data warehouse comes from various sources, such as application log files and transactional applications. A data warehouse stores structured data, which has a well-defined purpose and schema.

A data warehouse is designed to support business intelligence (BI) and analytics applications. It enables users to run complex queries and reports on the data, as well as perform advanced analytics techniques such as data mining, machine learning, etc.

A data warehouse follows the ETL (extract-transform-load) process, which involves extracting data from various sources, transforming it into a common format and structure, and loading it into the data warehouse.

What is a data lake?

Another concept that is related to data marts is a data lake. A data lake is a scalable storage platform that stores large amounts of structured and unstructured data (such as social media or clickstream data) and makes them immediately available for analytics, data science and machine learning use cases in real time.

With a data lake, the data is ingested in its original form, without any changes. The main difference between a data lake and a data warehouse is that data lakes store raw data, without any predefined structure or schema. Organizations do not need to know in advance how the data will be used.

A data lake follows the ELT (extract-load-transform) process, which involves extracting data from various sources, loading it into the data lake as-is, and transforming it when needed for analysis.

What is a data mart?

A data mart is a subset of a data warehouse that focuses on a specific business area, department or topic, such as sales, finance or marketing. Data marts provide specific data to a defined group of users, allowing them to access critical insights quickly without having to search through an entire data warehouse.

Data marts draw their data from fewer sources than data warehouses. The sources of data marts can include internal operational systems, a central data warehouse and external data.

Data marts are usually organized by subject or function. For example, a sales data mart may contain information about customers, products, orders, revenue, etc. A marketing data mart may contain information about campaigns, leads, conversions, etc.

Data marts are designed to support fast and easy query processing and reporting for specific business needs and goals. They enable users to run predefined and parameterized queries on the data, as well as create dashboards and visualizations.

Data marts can be created from an existing data warehouse - top-down approach - or from other sources - bottom-up approach. Similar to a data warehouse, a data mart is a relational database that stores transactional data (time values, numeric orders, references to one or more objects) in columns and rows to simplify organization and access.

What are the benefits of using a data mart?

Some of the benefits of using a data mart are:

• Relevance: A data mart provides relevant and specific information to a particular group of users who share common business interests and goals. It eliminates the need for users to sift through irrelevant or unnecessary information in a larger database.

• Performance: A data mart improves the performance and efficiency of query processing and reporting. It reduces the complexity and size of the queries by limiting the scope of the data. It also reduces the load on the central database by distributing the queries across multiple databases.

• Agility: A data mart enables faster and easier development and deployment of BI and analytics solutions. It allows users to create their own queries and reports without relying on IT staff or waiting for long development cycles.

• Security: A data mart enhances the security and privacy of the data by restricting the access to authorized users only. It also allows for better data governance and compliance by applying specific rules and policies to the data.

What are the challenges of using a data mart?

Some of the challenges of using a data mart are:

• Data quality: A data mart depends on the quality and accuracy of the data that feeds it. If the source data is incomplete, inconsistent or outdated, the data mart will reflect that and produce unreliable results.

• Data integration: A data mart requires data integration from various sources and formats into a common format and structure. This may involve transforming, enriching or aggregating the data using ETL or ELT processes.

• Data maintenance: A data mart requires regular maintenance and updates to keep up with the changing business needs and goals. This may involve adding, modifying or deleting data, as well as adjusting the schema and structure of the database.

• Data consistency: A data mart may create data inconsistency or redundancy issues if it is not aligned with the central data warehouse or other data marts. This may lead to confusion, errors or conflicts among users and applications.

How to get started with a data mart?

If you are interested in building and deploying a data mart in the cloud, you can use IBM as your platform. IBM offers a range of services and tools that can help you with every aspect of your data mart project, from data ingestion and storage to data processing and analytics.

Some of the IBM services and tools that you can use for your data mart are:

• IBM Db2 Warehouse: A fully managed cloud data warehouse service that supports online analytical processing (OLAP) workloads. You can use IBM Db2 Warehouse to store and query structured and semi-structured data using SQL.

• IBM Cloud Pak for Data: A fully integrated data and AI platform that supports online transaction processing (OLTP) and online analytical processing (OLAP) workloads. You can use IBM Cloud Pak for Data to store, manage and analyze your data using various services, such as IBM Db2, IBM Netezza Performance Server, IBM Watson Studio, etc.

• IBM Cloud Object Storage: A highly scalable, durable and secure object storage service that can store any amount and type of data. You can use IBM Cloud Object Storage as the foundation of your data lake, and organize your data into buckets and objects.

• IBM DataStage: A fully managed ETL service that can extract, transform and load your data from various sources into your data warehouse or data lake. You can use IBM DataStage to integrate, cleanse and transform your data using serverless jobs.

• IBM Cognos Analytics: A cloud-based business intelligence service that can connect to your data sources and provide interactive dashboards and visualizations. You can use IBM Cognos Analytics to explore and share insights from your data in your data mart.

I hope this post has given you a clear overview of what a data mart is and how it can help your business. If you have any questions or feedback, please leave a comment below.